Bringing automated measurement to security frameworks

The case for automation in security measurement is well evidenced and its benefits are well documented, from reducing teams’ reporting workloads to providing continuous visibility of control drift.

The move to automated measurement has even led Gartner to create a new technology category – Continuous Controls Monitoring (CCM) – in which Panaseer is listed as an inaugural vendor.

Another benefit of automation is standardization. By automating, we standardise how we measure, which keeps measurement consistent and accurate, makes insights comparable with one another, and improves their interpretability and impact. Yet despite much interest and investment in the topic, we as an industry have not yet managed to standardise what we measure.

Assessing the implementation of security frameworks

Whether a framework sets out recommended or legally mandated controls, security teams need to measure how well they have implemented them. However, security frameworks do not typically provide measurement guidance themselves, leaving security teams to design their own metrics.

Control frameworks are not always simple to assess against. One metric is often not sufficient to capture the success of a control implementation: usually at least two are needed, one to measure coverage and the other to measure performance. Designing measurements correctly is challenging, but crucial to ensuring that any assessment is valid.
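To make the coverage and performance distinction concrete, here is a minimal Python sketch. It assumes a hypothetical asset inventory and a hypothetical vulnerability-scanning standard (a scan within the last 7 days); the `Asset` fields, function names and threshold are illustrative and are not taken from any framework or from the Panaseer platform.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Asset:
    # Hypothetical inventory record; the field names are illustrative only.
    hostname: str
    scanner_installed: bool
    days_since_last_scan: Optional[int]  # None if the asset has never been scanned


def coverage(assets: list[Asset]) -> float:
    """Share of all in-scope assets on which the control is deployed."""
    if not assets:
        return 0.0
    covered = [a for a in assets if a.scanner_installed]
    return len(covered) / len(assets)


def performance(assets: list[Asset], max_scan_age_days: int = 7) -> float:
    """Share of covered assets on which the control meets the standard."""
    covered = [a for a in assets if a.scanner_installed]
    if not covered:
        return 0.0
    meeting = [
        a for a in covered
        if a.days_since_last_scan is not None
        and a.days_since_last_scan <= max_scan_age_days
    ]
    return len(meeting) / len(covered)


# Made-up sample data for illustration.
assets = [
    Asset("web-01", True, 2),
    Asset("web-02", True, 30),
    Asset("db-01", True, 5),
    Asset("laptop-17", False, None),
]

print(f"coverage: {coverage(assets):.0%}")
print(f"performance: {performance(assets):.0%}")
```

With this sample data, three of the four assets have the scanner installed and two of those three were scanned recently enough. Reporting either number alone would hide part of the picture: high performance says nothing about the assets the control never reached.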

Panaseer created a rich Control Checks and Metrics Catalogue to address this need – providing high-quality metrics which align to common industry frameworks. Organizations have a hierarchy of policies, controls, standards and procedures.

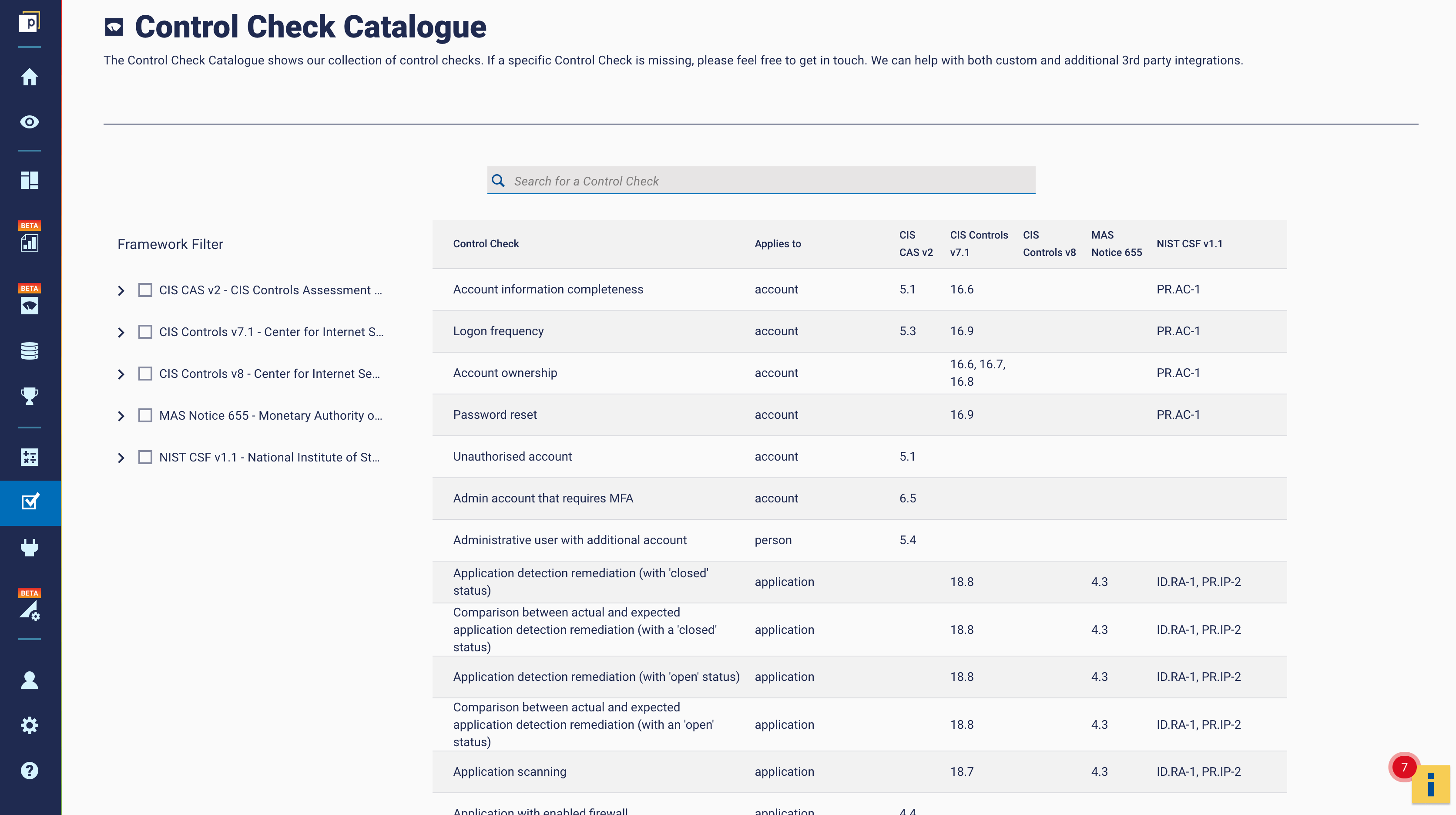

A Control Check in Panaseer is a way of condensing and codifying this hierarchy into a simple assessment (the ‘Check’) that can be applied to control data to identify whether or not the standard has been met. The Control Checks Catalogue is a collection of these common controls, which can be configured to reflect an organisation’s own standards. It looks something like this on our platform:

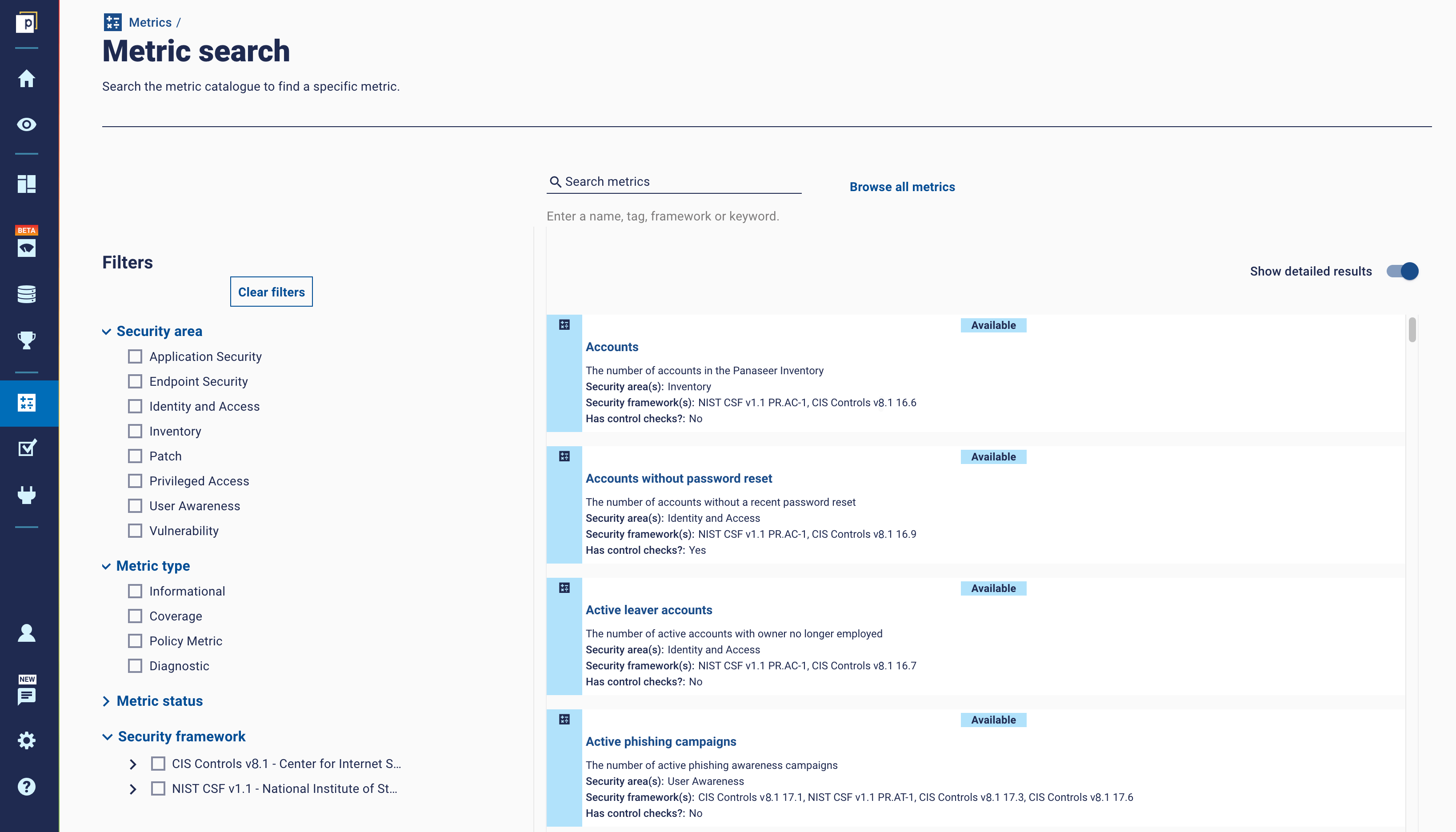
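As a rough sketch of the idea, and not a description of how the platform is built, a check can be thought of as a named pass/fail rule whose threshold comes from the organisation's own standard. Everything in the Python below is hypothetical: the `ControlCheck` structure, the `patch_age_check` helper, the record fields and the 14-day threshold.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class ControlCheck:
    # Hypothetical structure for illustration; not Panaseer's data model.
    name: str
    passes: Callable[[dict], bool]  # True when the record meets the standard


def patch_age_check(max_days: int) -> ControlCheck:
    """Build a check from an organisation's own standard (the threshold)."""
    return ControlCheck(
        name=f"critical patches applied within {max_days} days",
        passes=lambda record: record["oldest_missing_critical_patch_days"] <= max_days,
    )


def evaluate(check: ControlCheck, records: list[dict]) -> dict[str, bool]:
    """Apply the check to each control-data record, keyed by asset name."""
    return {r["asset"]: check.passes(r) for r in records}


# Made-up control data for illustration.
records = [
    {"asset": "web-01", "oldest_missing_critical_patch_days": 3},
    {"asset": "web-02", "oldest_missing_critical_patch_days": 45},
    {"asset": "db-01", "oldest_missing_critical_patch_days": 0},
]

check = patch_age_check(max_days=14)
results = evaluate(check, records)
print(check.name)
print(results)
```

Keeping the threshold as a parameter is the point of the sketch: the same check definition can be reused by organisations whose standards differ, and only the configured value changes.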

The Metrics Catalogue is a selection of configurable metrics that give organisations a range of insights: assessing compliance with standards (e.g. the % of assets failing a particular Control Check), helping to diagnose common issues and identify outliers, and demonstrating the success of security programmes to senior leadership. Here's how it looks on the platform:

Improving measurement standardization

While Panaseer Control Checks and Metrics Catalogues provide standardisation, benchmarking and improved communication within an organisation, there are, of course, many possible ways to measure.

How does the framework author interpret what success looks like for their recommendations versus how security teams interpret this? Does ‘aligning security metrics to CIS V8’ mean the same to everyone in the industry? In order to standardize more widely, it’s important to have a set of measurements designed at an industry level.

As Phyllis Lee of CIS says in the Metric of the Month article on security controls frameworks, ‘The author of a framework should be the authoritative source on how you measure success in that framework’. CIS is leading the way in this respect: it created its Controls Assessment Specification v1 in 2019 and updated it to v2 this year, documenting the metrics it recommends for assessing every sub-control (now called a ‘safeguard’) in its controls framework.

Creating a data-driven version of the Control Assessment Specification

This brings us back to automation. The taxonomies of many security frameworks have a theme of self-attestation running through them and rely on prose rather than code to describe controls. This is likely in part because these frameworks typically originated from a policy, rather than a technology, standpoint – and because different technologies could be used to enact the same policy.

As a result, performance against these frameworks is often assessed using non-data-driven and/or qualitative measurement. But as the technology we rely on to implement and monitor controls grows more complex, this approach is increasingly at odds with the daily reality of the multitude of tools and huge volumes of data that security teams face.

We should be codifying and quantifying our frameworks to align with our technology wherever possible – both for improved specificity and to enable our capacity for measurement to scale, as we turn to data, not people with checklists, to drive this.

With these principles in mind, Panaseer has reviewed Implementation Group 1 of the CIS Controls Assessment Specification and translated it, wherever possible, into a data-driven version that enables continuous measurement. To support this, we have implemented a raft of new Control Checks in the platform, which will help organisations continuously monitor their performance against the CIS Controls V8 framework. To take a couple of examples, here is a dashboard that shows compliance with IG1 by business unit:

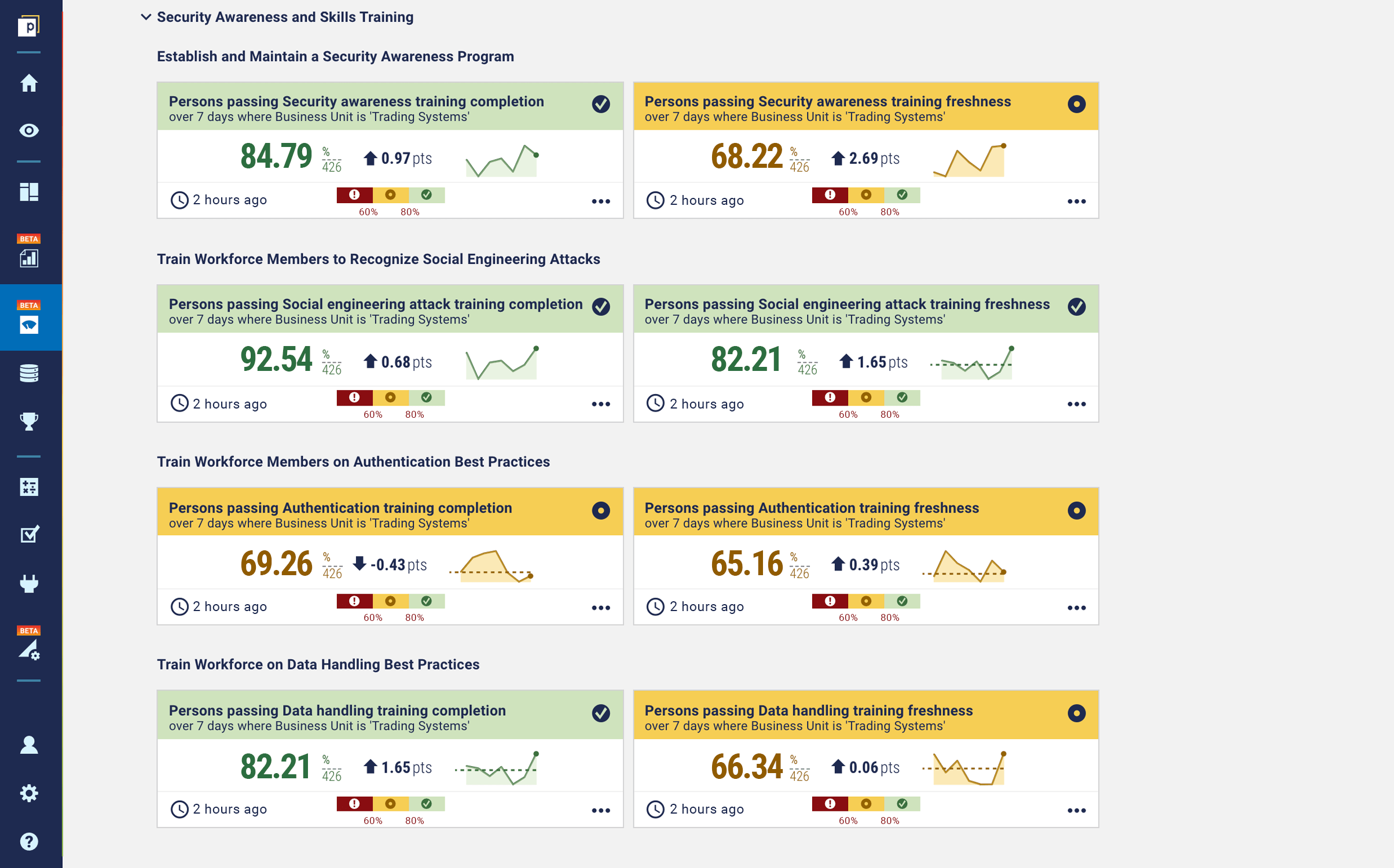
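For readers who want a feel for the kind of aggregation that sits behind a view like this, the following Python sketch rolls individual check results up by business unit. It is an illustration under stated assumptions, not the platform's implementation: the row layout, check names and business units are all made up, and "compliance" is taken here to mean the percentage of check results that passed.

```python
from collections import defaultdict

# Hypothetical check results: one row per (asset, check) pair.
rows = [
    {"business_unit": "Retail", "asset": "pos-01", "check": "asset inventory", "passed": True},
    {"business_unit": "Retail", "asset": "pos-01", "check": "mfa enabled", "passed": False},
    {"business_unit": "Retail", "asset": "pos-02", "check": "asset inventory", "passed": True},
    {"business_unit": "Retail", "asset": "pos-02", "check": "mfa enabled", "passed": True},
    {"business_unit": "Finance", "asset": "fin-01", "check": "asset inventory", "passed": True},
    {"business_unit": "Finance", "asset": "fin-01", "check": "mfa enabled", "passed": True},
]


def compliance_by_business_unit(rows: list[dict]) -> dict[str, float]:
    """Percentage of check results that passed, per business unit."""
    totals: dict[str, int] = defaultdict(int)
    passed: dict[str, int] = defaultdict(int)
    for row in rows:
        totals[row["business_unit"]] += 1
        passed[row["business_unit"]] += int(row["passed"])
    return {bu: 100 * passed[bu] / totals[bu] for bu in totals}


compliance = compliance_by_business_unit(rows)

# List business units from least to most compliant.
for unit, pct in sorted(compliance.items(), key=lambda item: item[1]):
    print(f"{unit}: {pct:.1f}%")
```

Other definitions are equally plausible, for example the percentage of assets that pass every check, which is one reason an industry-level specification of what to measure matters.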

And here is an example dashboard that drills down further into the specifics, showing the status of security awareness controls:

What’s next?

With the number of security frameworks and regulations increasing, and with it the burden on security teams to report against them all, standardisation and automation of measurement are key. We’re excited to continue to partner with CIS to develop assessment specifications that natively support automated, data-driven measurement and are compatible with CCM platforms. If you're interested in learning more about how we implemented the CIS Controls Assessment Specification for IG1, get in touch.