How to prioritize patching with vulnerability outlier analysis

'Some risks you always have to live with. Eliminating all risks is rarely the goal. My goal is to fix the highest risk items that are the easiest items. There’s a balance. In my mind, neither side will ever win out. But if I can knock out 40% of my highs and criticals by patching a handful of vulnerabilities across the environment, I’ll go in for that.'

In the Metric of the Month series, we are collaborating with security and risk pros to talk about some of the most interesting and essential security metrics and measures. In this instalment, we catch up with James Doggett to talk about vulnerability outlier analysis. Jim’s experienced in risk and security leadership roles at various healthcare and financial services orgs and has been a big advocate of Continuous Controls Monitoring for several years now.

What is vulnerability outlier analysis?

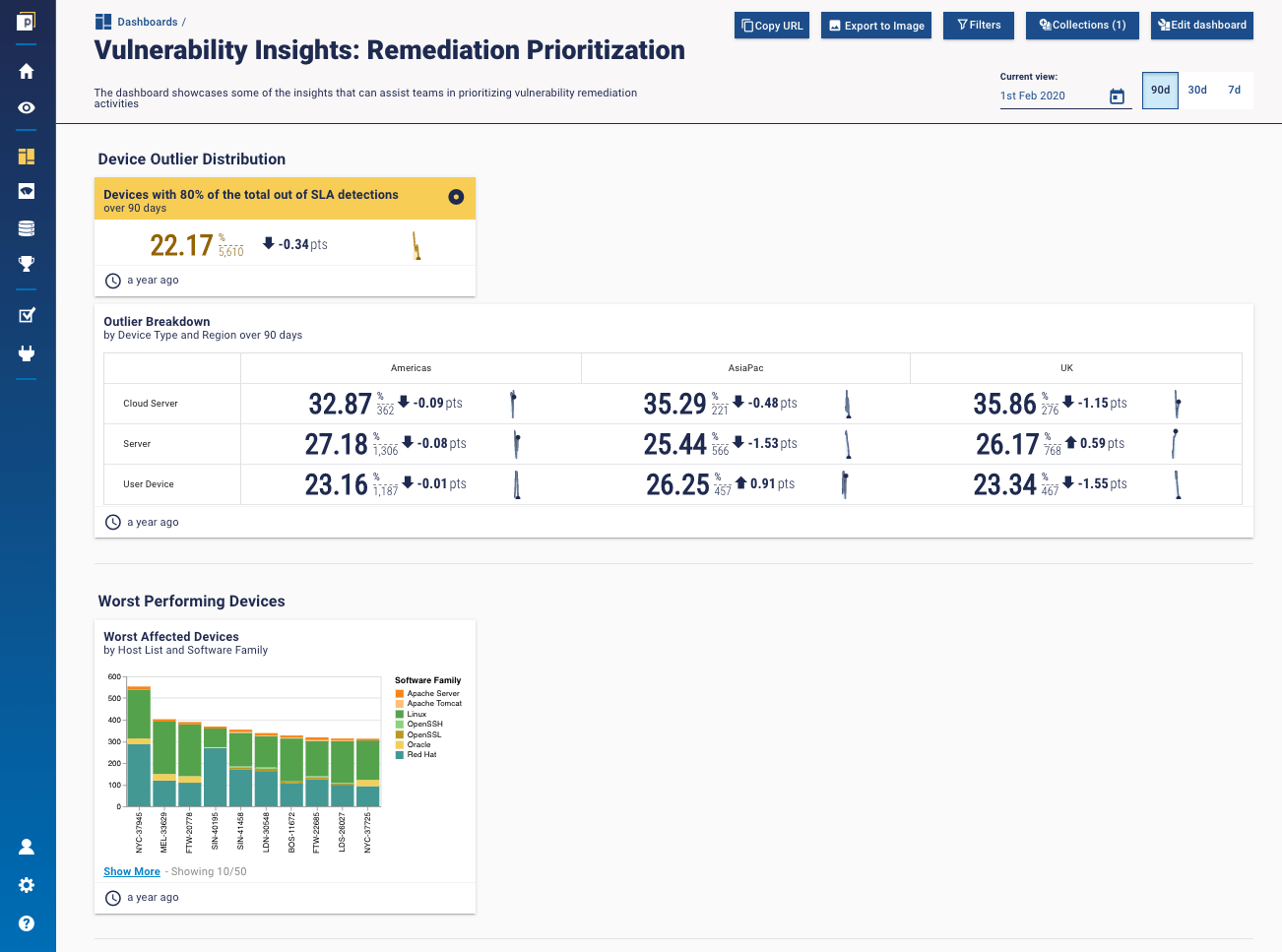

There are a few ways to look at outlier analysis, but the main theory is something like the Pareto principle. Very simply, the Pareto principle says that 20% of a population is responsible for 80% of output. When it comes to vulnerability outliers, you can apply that in a few ways. You might find that 20% of your vulnerabilities are affecting 80% of your machines. Or that 20% of the vulnerabilities out there in the wide world make up 80% of the vulnerabilities in your environment. Or that 20% of your machines are housing 80% of your vulnerabilities. Getting this kind of insight into the vulnerabilities in your organisation can be extremely valuable for remediation prioritisation, for example by identifying the ‘worst performing devices’ in your environment, as in the dashboard shown here. Whichever way you look at it, Jim says, ‘It makes a whole lot of sense to apply the 80/20 rule. We’ve done that in risk forever’.



You’ve got an entire population of vulnerabilities out there, whether it’s 1,000 or 100,000. Outlier analysis lets you pick out the items that share common traits. You may have just one instance of a specific vulnerability that could severely impact the business. Or you could have a vulnerability that exists throughout your environment, affecting every machine. Very basically, what vulnerability outlier analysis works out is: ‘which vulnerabilities should I fix to get the most bang for the buck?’ Say you’ve got one particular vulnerability that’s all over your environment. By patching that one vulnerability, you get ‘a whole lot of mileage’, making a big positive impact on overall risk to the organisation with a relatively small amount of effort.
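To make the ‘bang for the buck’ idea concrete, here is a minimal Python sketch of a Pareto-style ranking. It assumes scanner findings can be reduced to `(machine, vulnerability)` pairs; the function name `pareto_ranking`, the host names and the `VULN-n` identifiers are all hypothetical, and a real scanner export will look different.

```python
from collections import Counter

def pareto_ranking(findings):
    """Rank vulnerabilities by how many findings each one accounts for.

    findings: iterable of (machine, vuln_id) pairs (an assumed, simplified format).
    Returns a list of (vuln_id, count, cumulative_share), most widespread first.
    """
    counts = Counter(vuln for _machine, vuln in findings)
    total = sum(counts.values())
    ranked = []
    running = 0
    for vuln, count in counts.most_common():
        running += count
        # cumulative_share: fraction of all findings removed if every
        # vulnerability up to and including this one were patched everywhere
        ranked.append((vuln, count, running / total))
    return ranked

# Hypothetical sample data: VULN-1 is "all over the environment"
findings = [
    ("host-a", "VULN-1"), ("host-b", "VULN-1"), ("host-c", "VULN-1"),
    ("host-d", "VULN-1"), ("host-a", "VULN-2"), ("host-b", "VULN-2"),
    ("host-c", "VULN-3"), ("host-d", "VULN-4"),
]
ranking = pareto_ranking(findings)
for vuln, count, share in ranking:
    print(f"{vuln}: {count} findings, cumulative {share:.0%}")
```

In this toy data set, patching the single most widespread vulnerability removes half of all findings. That is the kind of outlier the analysis is meant to surface.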

How is it measured?

Jim noted a really important problem to keep in mind when you’re looking at vulnerability outliers: the mass of vulnerability data. ‘In my experience, before you apply the 80/20 rule to a population, it’s important to narrow the population down to those vulnerabilities that pose the highest risk to your business. You don’t want to focus on the lower risk items before the higher risk ones.’ Which vulnerabilities carry the most risk? Those that may cause a high level of incidents out in the world, or those that may affect critical applications. If it’s a marketing application, that may not be as important as a revenue-generating application.


Once you understand which vulnerabilities are low, medium, high, and critical to the business, you can start applying the 80/20 rule to the highs and criticals. Naturally, you want to go after these first. ‘Most companies I know will never get down to the low-risk vulnerabilities’, Jim noted. ‘They’re just trying to deal with the criticals and the highs. Now and again, they might get to the mediums, but nothing below that. It’s just not worth the time, effort or resource.’

‘Some risks you always have to live with. Eliminating all risks is rarely the goal. My goal is to fix the highest risk items that are the easiest items. There’s a balance. In my mind, neither side will ever win out. But if I can knock out 40% of my highs and criticals by patching a handful of vulnerabilities across the environment, I’ll go in for that.’ Effectively measuring vulnerability outliers provides you with the information to do that. ‘Equally though, there will be scenarios where we need to fix one vulnerability that affects one machine just because it’s so critical or the risk is so high.’ That’s where the balance comes in.
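The two-step approach Jim describes (narrow to highs and criticals, then look for the handful of fixes that clear a large share of them) could be sketched roughly as follows. This is an illustration under stated assumptions, not a description of any particular tool: the `(machine, vuln_id, severity)` record shape, the `quick_wins` function and the sample identifiers are hypothetical, and the 40% target is simply the figure from Jim’s quote.

```python
from collections import Counter

def quick_wins(findings, target_share=0.4, severities=("high", "critical")):
    """Pick the fewest vulnerabilities whose fixes clear `target_share`
    of the in-scope (high/critical) findings.

    findings: iterable of (machine, vuln_id, severity) tuples (assumed format).
    Returns (list of vuln_ids to patch, share of in-scope findings cleared).
    """
    # Step 1: narrow the population to the highest-risk findings
    in_scope = [f for f in findings if f[2] in severities]
    if not in_scope:
        return [], 0.0

    # Step 2: take the most widespread vulnerabilities first until the
    # target share of high/critical findings is covered
    counts = Counter(vuln for _machine, vuln, _severity in in_scope)
    picked, covered = [], 0
    for vuln, count in counts.most_common():
        if covered / len(in_scope) >= target_share:
            break
        picked.append(vuln)
        covered += count
    return picked, covered / len(in_scope)

# Hypothetical data: 10 high/critical findings plus 6 low-risk ones
findings = (
    [(f"host-{i}", "VULN-A", "critical") for i in range(5)]
    + [(f"host-{i}", "VULN-B", "high") for i in range(2)]
    + [("host-7", "VULN-C", "high"), ("host-8", "VULN-D", "critical"),
       ("host-9", "VULN-E", "high")]
    + [(f"host-{i}", "VULN-L", "low") for i in range(6)]
)
to_patch, share = quick_wins(findings)
print(to_patch, f"{share:.0%}")
```

Note what the sketch leaves out: it ranks purely by count, so it will not flag the single vulnerability on a single machine that needs fixing because the risk is so high. That judgement still has to sit alongside the numbers.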

Who needs to see vulnerability outliers?

First and foremost, the security team itself. Going back to basics, Jim spoke about the importance of reporting accurately and genuinely. ‘The CISO and the security team have been hired to manage the risk of security across the environment’. It is easy to fall into the trap of measuring and reporting what you fix, rather than how you reduce risk. ‘We told the story that sounded good’, rather than the full picture. Part of that is understanding that as a security team your domain is security risk, ‘but you have to realise that you can’t fix it all yourself’.

That leads to the people responsible for actually fixing the vulnerabilities you’ve highlighted with your outlier analysis: the IT team.

Then you have regulators and auditors, who will also be interested in metrics like these. ‘Like it or not, they’re going to be there, so you might as well prepare and have the metrics ready for them. The alternative is that they come in and you have to stop everything you’re doing and devote time to them’.

Then you have the CISO’s boss. The title may change, but you’ll have to give this person a more general view of where your risk is and how you are managing it. ‘They don’t need to know as much of the detail’, Jim noted, but they will still want to understand how you’re managing vulnerabilities, so vulnerability outlier analysis could be a good metric to show them.

If you have a particular board-level initiative to clean up vulnerabilities, then you’d also have to report this level of detail to the board. Otherwise, this wouldn’t make it into your usual board-level summary.

What advice would you give to teams getting started with vulnerability outlier analysis?

As we said earlier, you want to get the most ‘bang for the buck’ you can. That means prioritising high/critical vulns, finding easy wins, and reaching a good balance between the two. This could be some particularly useful advice for those operating in environments that are less mature. ‘In a less mature environment’, Jim noted, ‘you have less resources, so you’re probably not patching your systems as often. That’s when ‘bang for the buck’ becomes a real focus’. For a less mature environment, Jim also recommended that you could develop outlier analysis by looking at the root cause of vulnerabilities. Maybe your standard builds are flawed. Maybe your patching programme is haphazard or inefficient. Maybe it’s difficult to shut down systems to do the upgrades. Maybe some patches could cause more problems than they fix. There are all sorts of challenges. ‘The difference between a mature and immature environment is the level of ability to permanently fix issues based on root cause’. And this is good advice not just for vulnerabilities, but for security and risk teams in general.

Where do you see challenges in measuring vulnerability outlier analysis?


‘As always, it depends on what resources you have available’, noted Jim, which is something that all risk and security pros say, I’m sure. He highlighted a few key challenges. Vulnerability scanners produce a lot of data, which can be hard to process. It’s hard to know if they are scanning everything they should be. And they don’t provide context with that data. ‘The hard part in my mind is: Are your vulnerability scanners giving you full coverage? How do you make sense of all the data you do have?

‘A scanner tool only knows what it scans. It doesn’t know what it doesn’t scan. So that’s a gap right there that could get you into trouble. It also doesn’t know the inherent risk of the vulnerability to my environment. It doesn’t know if it’s on a machine that I don’t care about or if it’s a machine that’s mission-critical.’

That’s where you want a tool that can consolidate that information. Then you can compare your actual environment to what your vulnerability scanner sees and fix the gap in your scanner. That’s where Continuous Controls Monitoring comes in.
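Comparing ‘your actual environment to what your vulnerability scanner sees’ is, at its simplest, a set comparison. The sketch below assumes you can get one list of asset names from an inventory source and another from the scanner, and that the names already match between the two (often they do not). `scanner_coverage` and the host names are hypothetical.

```python
def scanner_coverage(inventory, scanned):
    """Compare an asset inventory with the assets a scanner reports on.

    inventory: asset names you believe exist (assumed to come from an
               inventory or CMDB-style source).
    scanned:   asset names the scanner actually produced results for.
    Returns (coverage ratio, unscanned assets, assets unknown to inventory).
    """
    inventory, scanned = set(inventory), set(scanned)
    unscanned = inventory - scanned   # the gap the scanner "doesn't know" about
    unknown = scanned - inventory     # scanned, but missing from the inventory
    coverage = len(inventory & scanned) / len(inventory) if inventory else 0.0
    return coverage, sorted(unscanned), sorted(unknown)

# Hypothetical example
inventory = ["host-a", "host-b", "host-c", "host-d"]
scanned = ["host-a", "host-b", "host-x"]
coverage, unscanned, unknown = scanner_coverage(inventory, scanned)
print(f"coverage {coverage:.0%}, unscanned {unscanned}, unknown {unknown}")
```

Both directions matter: unscanned assets are blind spots for the scanner, while scanned assets missing from the inventory suggest the inventory itself is incomplete.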

How can Continuous Controls Monitoring help?

As a new category, CCM is helping to overcome challenges like these. It highlights gaps in controls coverage by aggregating data from multiple tools in the environment, whether across security, IT or the business. ‘I hear so often’, Jim notes, ‘people saying we can’t afford to do that yet. But I’m not sure you can afford not to do that.’ These kinds of coverage gaps, not just in your vulnerability scanner but in other tools as well, ‘create a tremendous amount of potential risk’. According to Jim, there are three options for doing Continuous Controls Monitoring:

- Use a tool that’s out there, which in Jim’s mind is probably the most efficient and cost-effective (you’ll see why in a minute).

- Hire a bunch of people to do it manually, which can mean errors or gaps, and it’s expensive. ‘I’ve done this approach’, says Jim, ‘and it never seems to be fully complete or accurate’.

- Build it yourself.


Around four years ago, Jim was running a team that tried to implement some form of Continuous Controls Monitoring. Here’s how it went: ‘I hired a team to do this. They would manually pull the data from each of these vulnerability sources and try to consolidate that data into one list. Now, that’s not easy, because you’ve got duplicates, you got gaps, you got overlaps. One tool may call a machine by its MAC address, the other by an IP address, all kinds of problems come up. That takes a lot of people. Not to mention it’s very difficult to give timely data. By the time you’ve collected the data, it’s already days or weeks stale, which to me makes it somewhat less useful. So that’s why I don’t really think you have a choice in this. You’ve got to find a way to get current, accurate data. If you’ve got that, as a CISO, you can stay out of trouble.’

Bringing it back to security metrics, the key is to automate as much of the security measurement process as possible to get that ‘current, accurate data’. It’s valuable across the board, but particularly with vulnerability outlier analysis, which is an ever-shifting measurement for security teams.

If you want to keep up to date with Metric of the Month, sign up for the newsletter.
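To show why the consolidation Jim describes is fiddly, here is a small Python sketch that merges records from several tools when they share a MAC or IP address. The record shape (`mac`, `ip`, `source` keys), the `consolidate` function and the tool names are hypothetical. It also assumes an IP address identifies one machine for the whole collection window, which will not hold wherever addresses are reassigned.

```python
def consolidate(records):
    """Merge per-tool asset records that refer to the same machine.

    records: iterable of dicts with optional 'mac' and 'ip' keys and a
             'source' key naming the tool (assumed, simplified format).
    Two records are treated as the same asset if they share a MAC or an IP.
    Returns a list of assets: {"macs": set, "ips": set, "sources": set}.
    """
    assets = []
    for rec in records:
        mac = rec.get("mac")
        mac = mac.lower() if mac else None   # tools differ on MAC letter case
        ip = rec.get("ip")

        # Split existing assets into those this record matches and the rest
        matches, rest = [], []
        for asset in assets:
            hit = (mac in asset["macs"]) or (ip in asset["ips"])
            (matches if hit else rest).append(asset)

        # Fold every matching asset plus this record into one merged asset;
        # a record carrying both identifiers can bridge two earlier assets
        merged = {"macs": set(), "ips": set(), "sources": set()}
        for asset in matches:
            for key in merged:
                merged[key] |= asset[key]
        if mac:
            merged["macs"].add(mac)
        if ip:
            merged["ips"].add(ip)
        merged["sources"].add(rec["source"])
        assets = rest + [merged]
    return assets

# Hypothetical records from three tools describing two machines
records = [
    {"source": "scanner_a", "mac": "AA:BB:CC:00:00:01"},
    {"source": "scanner_b", "ip": "10.0.0.5"},
    {"source": "inventory", "mac": "aa:bb:cc:00:00:01", "ip": "10.0.0.5"},
    {"source": "scanner_b", "ip": "10.0.0.9"},
]
assets = consolidate(records)
print(len(assets), "assets after consolidation")
```

Even this toy version has to handle case differences and records that link two previously separate entries, which gives a sense of why doing the same thing by hand across many tools ends up slow and stale.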