How to improve vulnerability prioritization and remediation with business context

September 14, 2023

With security teams under constant pressure to tackle urgent problems, it’s vital that resources are focused where they’re needed most through effective vulnerability prioritization.

As organizations continue to digitize their operations and attack surfaces grow ever larger, the inconvenient truth is that not all cybersecurity vulnerabilities can be addressed in a timely manner.

A recent Ponemon Institute survey found that two-thirds of security leaders have backlogs of more than 100,000 vulnerabilities, and those backlogs are growing. A separate study put the median vulnerability remediation rate at 15.5% per month (meaning organizations only have capacity to remediate roughly 15 out of every 100 open vulnerabilities each month), which would be bad enough if published vulnerabilities weren’t also rising by around 25% a year.

This emphasizes the importance of working out how to prioritize vulnerabilities to fix those posing the greatest risk to your organization. But even established methods for prioritizing vulnerabilities have serious shortcomings, particularly in accurately contextualizing risk for individual businesses.

In this article we’ll look at:

- What is vulnerability prioritization?

- CVSS and EPSS models for prioritizing vulnerabilities

- Why is it challenging to prioritize cybersecurity vulnerabilities?

- Why is context important to vulnerability prioritization?

- How Continuous Controls Monitoring (CCM) helps improve vulnerability prioritization

What is vulnerability prioritization?

Vulnerability prioritization is the process of ranking which security vulnerabilities to remediate based on their respective risk. The type of risk can be defined in different ways, such as the technical risk of exploit, or the risk to the business if the vulnerability is exploited.

Receiving publicly available real-time data on cybersecurity vulnerabilities is a valuable opportunity to anticipate likely attacks before they compromise your organization, and this intelligence can be further enriched by vulnerability prioritization tools.

By embedding vulnerability prioritization technology into their cybersecurity and risk management functions, organizations can ensure they have the biggest impact on improving their security posture by focusing limited resources on the most critical fixes, patches and remediations.

CVSS and EPSS models for prioritizing vulnerabilities

Many organizations have adopted standard frameworks for their vulnerability prioritization programs. This allows them to consume an expert interpretation of each vulnerability so that they can be ranked according to their potential impact and likelihood of being exploited.

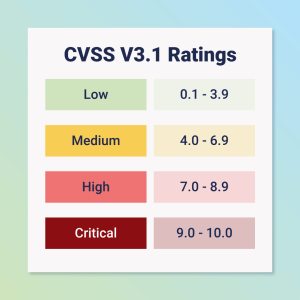

In CVSS (Common Vulnerability Scoring System), vulnerabilities are prioritized according to their ‘severity’; the higher the severity, the greater the urgency in remediating it relative to other vulnerabilities. To that end, SecOps teams could use this data to prioritize the most severe, and set internal SLAs to fix them within a maximum number of calendar days.
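
To make the severity-plus-SLA idea concrete, here is a minimal sketch. The severity bands follow the qualitative rating scale in the CVSS v3.x specification; the SLA values and the function names (`severity_band`, `remediation_due`) are assumptions made up for illustration, not recommended targets.

```python
from datetime import date, timedelta
from typing import Optional

# Qualitative bands from the CVSS v3.x rating scale: (lower bound, label).
CVSS_BANDS = [
    (9.0, "Critical"),
    (7.0, "High"),
    (4.0, "Medium"),
    (0.1, "Low"),
    (0.0, "None"),
]

# Hypothetical internal SLAs in calendar days (assumption, illustration only).
SLA_DAYS = {"Critical": 7, "High": 30, "Medium": 90, "Low": 180}


def severity_band(score: float) -> str:
    """Map a CVSS base score (0.0-10.0) to its qualitative severity label."""
    if not 0.0 <= score <= 10.0:
        raise ValueError(f"CVSS score out of range: {score}")
    for lower_bound, label in CVSS_BANDS:
        if score >= lower_bound:
            return label
    return "None"


def remediation_due(score: float, detected_on: date) -> Optional[date]:
    """Return the SLA due date, or None when the band carries no SLA."""
    days = SLA_DAYS.get(severity_band(score))
    return None if days is None else detected_on + timedelta(days=days)


# Example: a 9.8 found on the article's publication date falls in the
# "Critical" band, so the hypothetical 7-day SLA applies.
due = remediation_due(9.8, date(2023, 9, 14))
```

A lookup like this is easy to operate, which is part of its appeal, but it ranks purely on technical severity and knows nothing about the asset the vulnerability sits on.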

There are obvious limitations to this method, which we discuss in more detail later in this article, but research also shows that CVSS score doesn’t appear to have a major influence on how vulnerabilities are actually prioritized. Cyentia found that, on average, vulnerabilities with a severity score of 0-3 were fixed fastest, while those with the maximum severity score of 10 took the longest to remediate.

EPSS (Exploit Prediction Scoring System) was established in April 2021 by FIRST (Forum of Incident Response and Security Teams) as an alternative approach to vulnerability prioritization. Unlike CVSS, which rates technical severity, EPSS estimates how likely a vulnerability is to be exploited in the wild, drawing on data about real-world exploitation. This is important because fewer than 10% of all known vulnerabilities have ever been exploited in the wild. A 2023 Qualys study found that fewer than 5% of vulnerabilities published to the NIST NVD (National Vulnerability Database) in 2022 have actually been exploited.

Proponents of the EPSS approach (particularly the latest EPSS 3.0 version) point out the value of this additional contextual data when committing security teams’ finite resources towards vulnerabilities that pose the greatest risk. It is an issue that the US Cybersecurity and Infrastructure Security Agency (CISA) has also tried to address with its Known Exploited Vulnerabilities (KEV) Catalog.
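
As a rough illustration of how these signals can be combined, the sketch below ranks findings by KEV membership first, then EPSS probability, then CVSS score. The `Finding` type, the `rank` function, the ordering rule and the placeholder identifiers are all assumptions for this example; none of them is prescribed by FIRST or by the KEV Catalog.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Finding:
    cve_id: str    # placeholder identifier, not a real CVE
    cvss: float    # CVSS base score, 0.0-10.0
    epss: float    # EPSS probability of exploitation, 0.0-1.0
    in_kev: bool   # True if listed in the KEV Catalog


def rank(findings: List[Finding]) -> List[Finding]:
    """Order findings: known-exploited first, then by EPSS, then by CVSS."""
    return sorted(
        findings,
        key=lambda f: (f.in_kev, f.epss, f.cvss),
        reverse=True,
    )


# Hypothetical findings (values invented for illustration).
findings = [
    Finding("CVE-A", cvss=9.8, epss=0.02, in_kev=False),
    Finding("CVE-B", cvss=7.5, epss=0.60, in_kev=False),
    Finding("CVE-C", cvss=6.5, epss=0.10, in_kev=True),
]
ordered_ids = [f.cve_id for f in rank(findings)]
```

With these invented values, the finding with the highest CVSS score ends up last because nothing suggests it is being exploited, which is the kind of reordering EPSS proponents argue for.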

We’ll explore why context is so important in further detail in a later section.

Why is it challenging to prioritize cybersecurity vulnerabilities?

Rather than treating each vulnerability equally, in the order they emerge, SecOps teams can prioritize vulnerabilities based on their underlying attributes and other considerations.

However, this simple principle is beset by a number of complicating factors, including:

1. Volume of vulnerabilities. Security teams can be overwhelmed by the number of new vulnerabilities emerging each day. Resource allocation is further compromised by the gradually accumulating volumes of older vulnerabilities that may pose risk to the business.

2. Limited resources. Cybersecurity skills shortages are well documented with many organizations operating with under-strength teams. Over-reliance on manual processes compounds this issue, reducing the resources available to remediate vulnerabilities.

3. False negatives. A false negative occurs when the risk presented by a vulnerability is underestimated and the vulnerability is therefore overlooked, leaving it open to exploitation. This can occur when the methodology for vulnerability prioritization is too technically aligned and not sufficiently grounded in other aspects such as business context and whether vulnerabilities are actually being exploited in the wild (rather than hypothetically in danger of being exploited).

4. False positives. These are another symptom of a narrow or misaligned vulnerability prioritization methodology. Here, a false positive is a vulnerability given too much emphasis when its true risk to the organization is much lower than thought.

5. Pace of technological change. IT environments change all the time, bringing new vulnerabilities into scope. Unexpected changes can slip past vulnerability management without appropriate prioritization. The same is true of the rapidly evolving threat landscape, with emerging attack techniques continually “moving the goalposts”.

6. Overlapping vulnerability tools. Using multiple vulnerability tools offers benefits, such as broader coverage and redundancy, but can also cause duplication and inconsistency in vulnerability data, coverage gaps, integration problems and workflow inefficiency. All of these can compromise accurate prioritization and create disparate, competing sources of truth.

The principal issue with prioritizing cybersecurity vulnerabilities is applying an appropriate and consistent methodology that’s optimized for managing business risk. Business context is particularly important in vulnerability prioritization and should be deployed alongside technical considerations.

Why is context important to vulnerability prioritization?

Earlier we touched on some of the issues surrounding vulnerability prioritization frameworks. CVSS, one of the most widely used, is excellent at focusing on the technical aspects of a given vulnerability (e.g. its impact on availability and confidentiality) but does so at the expense of its broader business impact.

CVSS scores are also static, so are unable to adapt to changing circumstances, though CISA’s KEV Catalog can add context by flagging which vulnerabilities have actually been exploited in the wild.

What’s missing in the framework approach is an appreciation of contextual factors that relate to an organization’s risk management. For example:

- Impact on regulatory compliance

- Impact on data governance

- Reputational impact

- Potential financial loss (e.g. loss of business, compensation payments, fines, etc.)

- Organization’s risk appetite/tolerance

- Business systems or processes that might be affected

Organizations must allow for contextual factors like these when using frameworks like CVSS and EPSS 3.0 as the foundation for their vulnerability prioritization.

The other dimension here is how vulnerabilities are assessed and managed within the context of each organization’s IT environment and service level agreements (SLAs). This context ultimately determines how ‘realistic’ a risk is in terms of its severity and impact.

The same vulnerability might pose very different levels of risk depending on whether – for example – it appears on 1 or 1,000 machines, or affects a non-critical internal system versus an externally facing system hosting highly sensitive customer information.

Likewise, an OS vulnerability may already be patched on 60% of devices, but not on the remaining 40% and – without understanding the full context – it can be difficult to assess how urgently resources should be deployed to fill that gap.
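
The sketch below shows one way to look past a headline coverage figure: it computes overall patch coverage, then breaks the unpatched remainder down by exposure and criticality. The `Device` fields, function names and fleet data are hypothetical and stand in for whatever asset attributes your inventory actually records.

```python
from collections import Counter
from typing import List, NamedTuple


class Device(NamedTuple):
    name: str
    patched: bool
    external_facing: bool
    business_critical: bool


def patch_coverage(devices: List[Device]) -> float:
    """Fraction of devices that already have the patch applied."""
    if not devices:
        raise ValueError("no devices supplied")
    return sum(d.patched for d in devices) / len(devices)


def unpatched_by_context(devices: List[Device]) -> Counter:
    """Count unpatched devices per (external_facing, business_critical) pair."""
    counts: Counter = Counter()
    for d in devices:
        if not d.patched:
            counts[(d.external_facing, d.business_critical)] += 1
    return counts


# Hypothetical fleet: 6 patched, 4 unpatched (60% coverage).
fleet = (
    [Device(f"desktop-{i}", True, False, False) for i in range(6)]
    + [Device("web-01", False, True, True)]
    + [Device(f"laptop-{i}", False, False, False) for i in range(3)]
)
coverage = patch_coverage(fleet)
gap = unpatched_by_context(fleet)
```

In this invented fleet the 40% gap is mostly internal, non-critical laptops, but one unpatched device is both externally facing and business critical, and that is the one the coverage figure alone would never surface.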

How CCM helps improve vulnerability prioritization

By enriching both controls and patch data with business context, including asset ownership and business processes, Panaseer’s Continuous Controls Monitoring (CCM) platform helps organizations target remediation efforts towards the most critical risks.

Armed with this more sophisticated method of prioritization, security teams can mature their patching policies to align more closely with risk management requirements. This includes evolving from policies based only on vulnerability properties (e.g. severity) to those that consider business context such as asset criticality.

CCM enables this prioritization process to be fully automated, with alerts triggered by predefined red/amber/green thresholds and remediation tracked against recognized frameworks and your organization’s security policies. This saves time, improves decision making and rapidly reduces your cyber risk.
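
For readers unfamiliar with red/amber/green thresholds, a minimal sketch of the general idea follows. It is not Panaseer’s implementation; the metric (share of assets patched within SLA), the threshold values and the `rag_status` name are assumptions chosen for illustration.

```python
def rag_status(value: float, amber_at: float = 0.80, green_at: float = 0.95) -> str:
    """Classify a 0-1 metric as 'red', 'amber' or 'green'.

    `value` could be, for example, the share of assets patched within SLA.
    Both thresholds are hypothetical defaults, not recommended targets.
    """
    if not 0.0 <= value <= 1.0:
        raise ValueError(f"metric must be between 0 and 1, got {value}")
    if amber_at > green_at:
        raise ValueError("amber threshold must not exceed green threshold")
    if value >= green_at:
        return "green"
    if value >= amber_at:
        return "amber"
    return "red"


# Example: 85% of assets patched within SLA sits between the two thresholds.
status = rag_status(0.85)
```

In practice the thresholds would come from your security policy, and a change of status is what would trigger an alert.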

Prioritizing remediation based on security policy failures

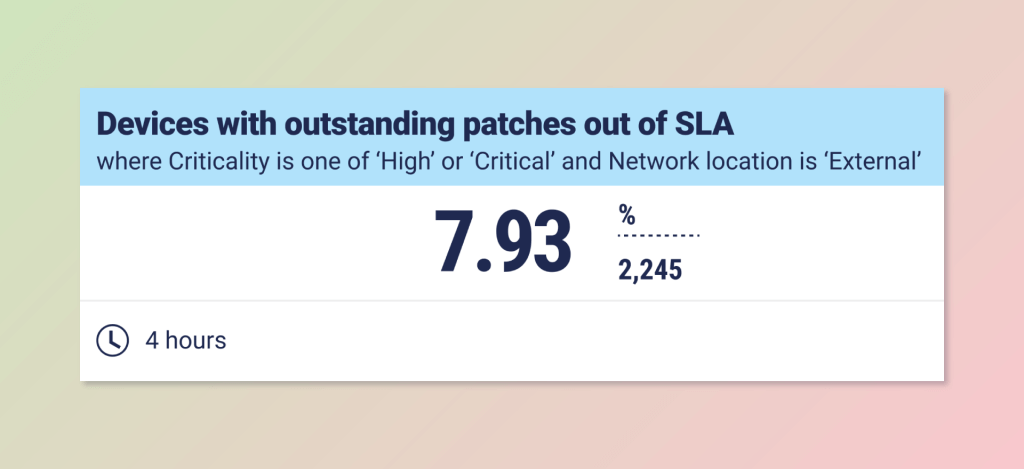

One common use case in the Panaseer platform is to prioritize remediation based on security policy failures. With a unified view of all vulnerability and patch data, Panaseer customers are able to drill down to identify patches that haven’t been applied within the service-level agreements (SLAs) set out in security policies.

This data can then be further refined to identify out-of-SLA patches on business-critical assets that are also on the external network. Security and IT teams can then prioritize the devices that present the biggest business risk.
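
The drill-down described above amounts to a filter followed by a sort. The sketch below is a generic illustration of that logic rather than a view of the Panaseer platform: the `MissingPatch` record, its field names and the sample data are all hypothetical.

```python
from dataclasses import dataclass
from datetime import date
from typing import List


@dataclass(frozen=True)
class MissingPatch:
    asset: str
    patch_id: str            # placeholder identifier
    released_on: date
    sla_days: int            # SLA from the (hypothetical) security policy
    business_critical: bool
    external_network: bool


def days_overdue(patch: MissingPatch, today: date) -> int:
    """Days past SLA; zero or negative means the patch is still within SLA."""
    return (today - patch.released_on).days - patch.sla_days


def remediation_queue(patches: List[MissingPatch], today: date) -> List[MissingPatch]:
    """Out-of-SLA patches on critical, external assets, most overdue first."""
    in_scope = [
        p for p in patches
        if days_overdue(p, today) > 0
        and p.business_critical
        and p.external_network
    ]
    return sorted(in_scope, key=lambda p: days_overdue(p, today), reverse=True)


# Hypothetical missing-patch records.
today = date(2023, 9, 14)
patches = [
    MissingPatch("web-01", "PATCH-1", date(2023, 8, 1), 14, True, True),
    MissingPatch("hr-laptop", "PATCH-2", date(2023, 6, 1), 30, False, False),
    MissingPatch("api-02", "PATCH-3", date(2023, 9, 10), 14, True, True),
    MissingPatch("pay-01", "PATCH-4", date(2023, 7, 1), 30, True, True),
]
queue = [p.asset for p in remediation_queue(patches, today)]
```

Here the long-overdue laptop drops out because it is neither critical nor external, while the recently released patch on `api-02` drops out because it is still within SLA.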

This type of analysis ensures CISOs can have a bigger impact on reducing security risk and improve adherence to internal security policies.

The final word

There are different ways to prioritize vulnerabilities depending on the maturity of your cybersecurity programme. As the single source of truth across your environment, Panaseer provides unique business context that enables you to get a true understanding of which vulnerabilities present the biggest risk to your organization.

With CCM, you can also track progress of remediation and measure the improvements in your security posture as a result, reducing the likelihood of a costly breach.

Request a demo to see how Panaseer improves how you prioritize vulnerabilities and remediation, as well as transforming your approach to security posture management.