How do you measure cyber awareness culture?

For this edition of Metric of the Month, we spoke with Andreas Wuchner about measuring cyber awareness culture. Normally we would focus on a single metric, as in our other articles, but as we started to discuss the basic cyber awareness metrics that organizations typically measure, it became clear that there is more to say about the state of cyber awareness culture.

In our discussion, Andreas pointed out the need for organizations to shift towards a more cyber-aware culture, empowering people to become security assets, rather than cyber awareness being a simple tick-box exercise.

How important is measuring cyber awareness culture?

‘It depends on who you talk to’, says Andreas. ‘In the past, measuring cyber awareness culture was definitely secondary’. Until recently, cyber awareness metrics have been treated by many as a tick-box exercise driven by regulations. ‘The regulator requires x number of hours of cyber awareness training per employee per year, and once that is done, the organization ticks a box and waits until next year. They require phishing simulation, so you run a campaign, see who clicks and who doesn’t, and there’s your result. Done.’

Should it be more important? ‘Absolutely’, says Andreas. ‘If you look at successful cyber-attacks in the last few years, something like 90% can be traced to human error. Even if it were 50%, that’s still really high. You have all these tools in place, but they don’t stop everything. That’s why it is still essential to empower people to get this number down. More modern organizations are looking to improve.’

‘The difficulty is really getting people to care about cybersecurity’, says Andreas. ‘The cyber awareness culture challenge is this: a lot of organizations think that the user is the biggest source of their security problems. Not many have thought to transform the human behind the computer into the strongest spearhead at the first line of defense. If you see someone as the source of a problem, that’s very different from thinking about how to empower them to be an asset rather than a liability.’

In the future, will more organizations improve? ‘Definitely’, says Andreas. ‘We will see more regulators and auditors asking about awareness and cultural programs. They are starting to recognize that the user is not just a source of problems but can be empowered’.

What are the challenges in measuring cyber awareness?

On the technology side, some challenges are common throughout security metrics. The tool only knows what it knows – it doesn’t give you data in context. Part of the problem in measuring cyber awareness culture is that data coming from tools, like the number of phishing clicks, measures just clicks, not the culture itself.

Beyond that, can you even trust the data you are getting from these tools? Is the tool deployed properly? Is coverage optimized? Is the data accurate and timely? ‘Only if you have consistent, trustworthy data can you turn it into meaningful information’, says Andreas.
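
To make the coverage and timeliness questions concrete, here is a minimal Python sketch that compares an awareness tool's records with an HR roster. The `ToolRecord` type, the 30-day freshness window, and the shape of the data are hypothetical assumptions for illustration, not the export format of any particular tool.

```python
from __future__ import annotations

from dataclasses import dataclass
from datetime import date, timedelta


@dataclass
class ToolRecord:  # hypothetical export row from an awareness tool
    employee_id: str
    last_seen: date  # last date the tool reported data for this person


def coverage_report(hr_roster: set[str], records: list[ToolRecord],
                    today: date, max_age_days: int = 30) -> dict:
    """Compare the awareness tool's view of the workforce with HR's view."""
    seen = {r.employee_id: r.last_seen for r in records}
    seen_ids = set(seen)
    covered = hr_roster & seen_ids
    stale = {e for e in covered
             if today - seen[e] > timedelta(days=max_age_days)}
    return {
        # Share of current employees the tool knows about at all.
        "coverage": len(covered) / len(hr_roster) if hr_roster else 0.0,
        # Employees HR lists but the tool has never reported on.
        "missing": sorted(hr_roster - seen_ids),
        # Employees whose data is older than the freshness window.
        "stale": sorted(stale),
        # IDs the tool still holds that HR no longer lists (e.g. leavers).
        "not_in_hr": sorted(seen_ids - hr_roster),
    }
```

Only once numbers like these look healthy is it worth reading much into click rates or completion figures from the same tool.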

On the people side, it is really hard to build a cyber-aware culture. ‘Buying a new security tool is much easier than making everyone in your organization care deeply about the importance of cybersecurity’, says Andreas. Many people just see security as a hassle that gets in the way of doing their job. You need to talk to everyone, educate everyone, and make it relevant for them, and even then it is hard to get them to engage. One tried-and-tested approach is to raise awareness that cybersecurity is a ‘whole of life issue’.

If you can embed this message in the mindset of non-cyber people, you are halfway to winning the battle. People care when they see that it can affect their personal lives or their families. This changes behaviors, which then become habits that are more easily applied in the workplace. A lot of cyber awareness culture programs also work with cyber champions: people in the organization who care about security and are willing to dedicate their time to improving the baseline security knowledge and practice of the team they work in.

They become advocates, who talk about security, check up on their peers, and drive that culture. This human-to-human element is much more effective than diktats from a faceless board. Some organizations may look to gamify cyber awareness. ‘You can have a Wall of Shame for the team with the worst click rates’, Andreas joked, but more seriously, a Wall of Fame can celebrate teams that are outstanding in their cyber awareness, who are advocates for the cyber culture you are trying to embed in the organization. These are the people who are security assets rather than liabilities.

How to measure cyber awareness culture

It can be very difficult to measure cyber awareness culture within an organization. It is a matter of course that organizations will measure basic things like whether people have completed the cyber onboarding training, or how often people click on phishing campaigns.

This is often driven by regulatory requirements or frameworks. Indeed, the Secure Controls Framework (SCF) has eleven controls around security awareness and training, and the NIST Cybersecurity Framework groups awareness and training under its ‘Protect’ function. It is notable, however, that not all frameworks give security awareness the same prominence; ISO 27001 and COBIT, for example, fold it in among many other requirements. Perhaps this is a hangover from when cyber awareness was thought to be a ‘secondary’ aspect of security.

You can measure these controls relatively easily, and thus prove to the regulator that your organization is compliant. ‘But do you know anything about the cyber awareness culture?’, asks Andreas. This tick-box attitude doesn’t really help you to reduce risk in the organization.

‘In the future’, says Andreas, ‘the auditor or regulator will change their line of questioning: “Do you have a training program? Yep. Has everyone done it? Yep. Is it effective? I don’t know.” This is a very different question.’

Andreas broached the subject with a two-pronged example that highlights the difference between measuring security awareness and measuring security culture.

‘Say I give you a test of ten questions about cyber awareness. What makes a strong password? How do you spot a phish? Things like that. At the end, I see that you got six out of ten. Six is the threshold, so you pass.

‘But I don’t know if you actually know these things. Were you guessing? Did you get your assistant to take the test? Or a tech-savvy teenager you met at a café?

‘In a modern organization that measures culture, you will answer the same questions. But I will also measure how long it takes you to answer. I will ask how confident you are in your answers. That way I can learn much more about you as a user.

‘There might be someone who has a big ego, very bullish. They take the test and go: “I know security. Click click click click. How confident am I? Ten out of ten. I’m the hero.” But then they get five answers wrong. Even though they were super confident and thought they were super cool, it turns out they’re not, because they’re making massive mistakes.’

You can learn much more about the security culture this way. Over-confident users like this one are less likely to be careful. They won’t hover over the sender’s address to see that the message is not, in fact, an urgent email from the CEO but a phish sent from a rogue Gmail account in North Korea. Users like this are indicative of a weak cyber awareness culture.

But where there are these kinds of users, you can also find users who are already a security asset. They have engaged with the training, understand personal digital security, and can confidently answer all ten questions quickly and correctly.

Over time, and with analysis, you start to build a picture of the cyber culture.
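
The difference between a pass mark and a culture measurement can be sketched in a few lines of Python. This is a hypothetical scoring scheme, not Andreas's method: it assumes each answer records whether it was correct, a self-rated confidence from 0 to 10, and the time taken, and the label thresholds are illustrative guesses that would need calibrating against real data.

```python
from __future__ import annotations

from dataclasses import dataclass
from statistics import mean, median


@dataclass
class Answer:
    correct: bool
    confidence: int  # self-rated, 0 (pure guess) to 10 (certain)
    seconds: float   # time taken to answer the question


def profile(answers: list[Answer], pass_mark: float = 0.6,
            fast_seconds: float = 20.0) -> dict:
    accuracy = mean(1.0 if a.correct else 0.0 for a in answers)
    confidence = mean(a.confidence for a in answers) / 10
    gap = confidence - accuracy  # positive: more confident than correct
    typical_time = median(a.seconds for a in answers)

    # Illustrative thresholds only; calibrate against your own data.
    if gap >= 0.3:
        label = "overconfident"
    elif accuracy >= 0.9 and confidence >= 0.8 and typical_time <= fast_seconds:
        label = "security asset"
    elif accuracy >= pass_mark and confidence < 0.5:
        label = "passed but unsure"
    else:
        label = "developing"

    return {"passed": accuracy >= pass_mark, "accuracy": accuracy,
            "confidence": confidence, "gap": gap,
            "median_seconds": typical_time, "label": label}
```

Under these assumptions, the bullish user described above (ten out of ten confidence, five wrong answers) is labelled `overconfident` and fails, while someone who scrapes six out of ten with low confidence passes but is labelled `passed but unsure`, which a plain pass mark would never reveal.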

Andreas also highlights auto-locking screens as a way to measure cyber awareness culture. Many companies have a policy that you should manually lock your computer whenever you leave it. If you don’t, it will lock automatically after x minutes, thanks to a centrally managed policy setting.

In an office environment, people may be less likely to leave their screens unlocked, as there are usually cyber-aware colleagues around who could call them out on it. But many more people now work from home, where spouses, housemates, or children may be around to use the device without appropriate training or permission. Who knows what they might see or click on?

Tracking auto-locks as a metric gives you some insight into cyber awareness culture. From the training records, you can see that people have read the guidelines and done the training, so they know that policy requires them to lock their screen manually whenever it is unattended.

‘But in reality, they just leave it when they go out to lunch and it runs into auto-lock’, says Andreas. ‘So then I know the culture isn’t as good as it could be and/or there is a problem with the training. Policy needs to be meaningful: it shouldn’t just be “do this”, it should be about showing people why these things are important.’
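
A minimal Python sketch of the auto-lock metric, assuming you can export lock events from endpoints as `(user, kind)` pairs where `kind` is `"manual"` or `"auto"` (a hypothetical format). It computes each user's share of automatic locks and flags people who completed the training but still mostly leave their screen to auto-lock; the 0.5 threshold is an arbitrary illustration.

```python
from __future__ import annotations

from collections import Counter


def auto_lock_share(events: list[tuple[str, str]]) -> dict[str, float]:
    """Share of each user's screen locks that were automatic rather than manual."""
    manual, auto = Counter(), Counter()
    for user, kind in events:
        if kind == "auto":
            auto[user] += 1
        elif kind == "manual":
            manual[user] += 1
    users = set(manual) | set(auto)
    return {u: auto[u] / (auto[u] + manual[u]) for u in users}


def trained_but_not_practising(shares: dict[str, float], trained: set[str],
                               threshold: float = 0.5) -> list[str]:
    """Users who passed the training yet mostly leave their screen to auto-lock."""
    return sorted(u for u, s in shares.items() if u in trained and s > threshold)
```

Keeping untrained users out of the flagged list separates the two explanations in Andreas's quote: a high auto-lock share among trained users points at culture or training quality, while among untrained users it points at a simple coverage gap.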

Andreas pointed out that the scope of this article doesn’t cover everything – he suggested some excellent resources on cyber awareness culture metrics and measurement. Effective questionnaires will measure things like employee trust in the organization’s cyber resilience, the extent to which they feel responsible for security, the quality of security resources and training available to them, and whether they feel they can be both secure and productive. Again, a sliding scale of confidence is a better measure than a simple yes/no option.
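
As a rough illustration of why a sliding scale carries more information than yes/no, the sketch below normalises 0 to 10 answers per questionnaire dimension and reports both the average and the share of respondents in the bottom half of the scale. The dimension names in the usage are hypothetical stand-ins for the themes listed above.

```python
from __future__ import annotations

from collections import defaultdict
from statistics import mean


def summarise(responses: list[dict[str, int]],
              scale_max: int = 10) -> dict[str, dict[str, float]]:
    """Average sliding-scale answers per dimension, normalised to 0..1."""
    scores: dict[str, list[float]] = defaultdict(list)
    for response in responses:
        for dimension, value in response.items():
            if not 0 <= value <= scale_max:
                raise ValueError(f"{dimension}: {value} is outside 0..{scale_max}")
            scores[dimension].append(value / scale_max)
    return {
        dimension: {
            "mean": round(mean(values), 2),
            # Share of respondents in the bottom half of the scale.
            "share_low": round(sum(v < 0.5 for v in values) / len(values), 2),
        }
        for dimension, values in scores.items()
    }
```

Two dimensions can share the same average while differing in how many people sit at the low end, and a yes/no question would hide both differences.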

What does Continuous Controls Monitoring bring to cyber awareness culture?

‘Nobody really buys CCM solely for the sake of improving their security awareness; they buy CCM to lower their risk’, says Andreas. But, as noted above, something like 90% of successful cyber-attacks can be traced to human error, so the human factor cannot be left out. Awareness of human-factor risk is one element in understanding your overall security and risk posture.

The reason that Continuous Controls Monitoring is so valuable to security measurement, in general, is that it combines data from multiple disparate sources that wouldn’t normally interact. A tool only sees what it sees, so if you rely solely on that tool for your metrics, you’re getting a narrow view that lacks context.

A cyber awareness tool, for instance, may not know about new joiners, leavers, or similar changes in the workforce. Combining data from the awareness tool with data from an HR system provides that extra layer of context. Similarly, you might combine awareness data, auto-lock data, and privileged access data. Then you can see whether people who have access to critical business applications are also leaving their screens unattended until they auto-lock. The awareness tool tells you that they have passed their security training; the combined view tells you whether it has actually resonated.

‘If you have a lot of people with access to critical information who don’t care about cybersecurity, you need to have knowledge of that’, says Andreas, ‘because that means there is a much higher risk of something going wrong’.
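
The kind of overlay described above amounts to a join on employee ID. Here is a minimal Python sketch, assuming each source has already been exported into a simple dictionary or set keyed by a shared employee ID; the parameter names, the `"active"`/`"leaver"` statuses, and the 0.5 threshold are all hypothetical.

```python
from __future__ import annotations


def combined_view(hr_status: dict[str, str], passed_training: set[str],
                  auto_lock_share: dict[str, float], privileged: set[str],
                  lock_threshold: float = 0.5) -> dict[str, list]:
    """Join HR, awareness, endpoint and access data on employee ID."""
    unlocked_privileged = []
    leavers_with_access = []
    for emp, status in hr_status.items():
        if emp not in privileged:
            continue
        if status == "leaver":
            # Privileged access that should have been removed on leaving.
            leavers_with_access.append(emp)
            continue
        share = auto_lock_share.get(emp)
        if share is None:
            continue  # no endpoint data: a coverage gap, not a finding
        if share > lock_threshold:
            # True means they passed the training but the habit has not stuck.
            unlocked_privileged.append((emp, emp in passed_training))
    return {"unlocked_privileged": sorted(unlocked_privileged),
            "leavers_with_access": sorted(leavers_with_access)}
```

No single tool could produce either list on its own: the awareness tool does not know who holds privileged access, and the access system does not know who passed training.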

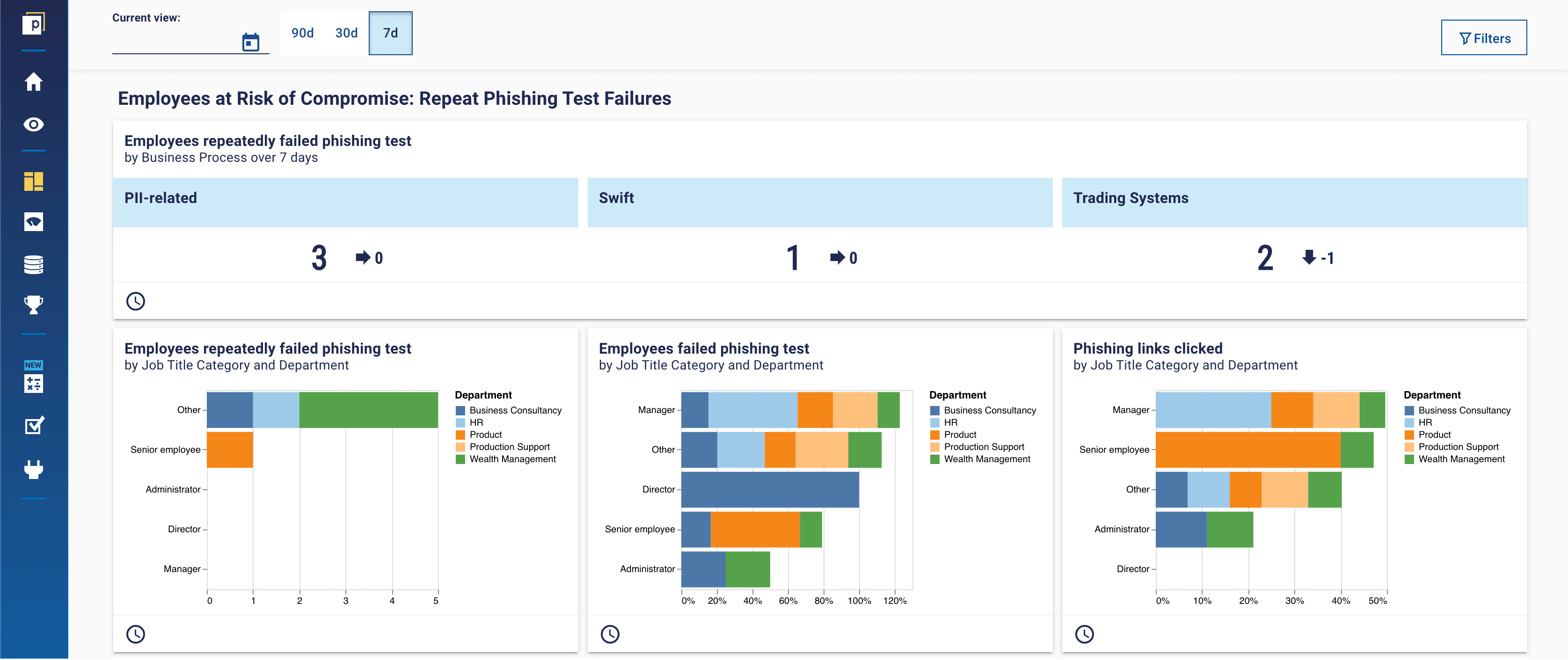

‘By combining data in different ways’, says Andreas, ‘you can start to ask really interesting questions about cyber awareness culture. Are all new joiners enrolled in mandatory training? Did they all get their first phishing test email in their first week? How often have they clicked, compared to people who have been with the organization for ten years? Do younger people click more or less? Do people with longer tenures click more or less? Which departments click the most? Do managing directors click more or less than entry-level employees?’

Answering these kinds of questions provides much more meaningful insight than just looking at the number of clicks or auto-locks in isolation. You can use these answers to adjust your training accordingly. ‘You need to overlay information to get real understanding, value, and insight into your cyber awareness culture’.
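
Several of the questions Andreas lists come down to grouping and filtering merged data. The Python sketch below assumes a hypothetical `Employee` record that already combines HR fields (department, start date) with awareness-tool fields (enrolment, first simulated phish, emails sent and clicked); the 90-day joiner window, the seven-day first-test window, and the tenure bands are arbitrary illustrative choices.

```python
from __future__ import annotations

from collections import defaultdict
from dataclasses import dataclass
from datetime import date
from typing import Callable, Optional


@dataclass
class Employee:  # hypothetical merged HR + awareness-tool record
    employee_id: str
    department: str
    start_date: date
    enrolled: bool               # enrolled in mandatory awareness training
    first_phish: Optional[date]  # date of first simulated phishing email, if any
    emails_sent: int             # simulated phishing emails received
    clicks: int                  # simulated phishing emails clicked


def click_rate_by(employees: list[Employee],
                  key: Callable[[Employee], str]) -> dict[str, float]:
    """Pooled click rate (clicks / emails sent) for each group returned by key."""
    sent: dict[str, int] = defaultdict(int)
    clicked: dict[str, int] = defaultdict(int)
    for e in employees:
        group = key(e)
        sent[group] += e.emails_sent
        clicked[group] += e.clicks
    return {g: clicked[g] / sent[g] for g in sent if sent[g] > 0}


def tenure_band(e: Employee, today: date) -> str:
    years = (today - e.start_date).days / 365.25
    if years < 1:
        return "<1y"
    if years < 10:
        return "1-10y"
    return "10y+"


def new_joiner_gaps(employees: list[Employee], today: date,
                    joiner_days: int = 90, first_test_days: int = 7) -> list[str]:
    """Recent joiners who are not enrolled or missed their first-week phishing test."""
    gaps = []
    for e in employees:
        days_in = (today - e.start_date).days
        if days_in > joiner_days:
            continue  # not a recent joiner
        if e.first_phish is None:
            # Only count as late once the first-test window has passed.
            late = days_in > first_test_days
        else:
            late = (e.first_phish - e.start_date).days > first_test_days
        if not e.enrolled or late:
            gaps.append(e.employee_id)
    return sorted(gaps)
```

Usage might look like `click_rate_by(staff, lambda e: e.department)` or `click_rate_by(staff, lambda e: tenure_band(e, today))`. Pooling clicks over emails sent, rather than averaging per-person rates, keeps people who received only one or two test emails from swinging a group's rate.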

The bottom line

I have never concluded one of these articles by quoting myself, but I listened back to the recording of my conversation with Andreas and, when he asked me how we were going to continue with the article, I absolutely nailed it, so: ‘I think, really, the most valuable thing we've spoken about here is trying to shift away from just doing cyber awareness as a tick-box exercise and towards the kind of culture that empowers human users to be an asset rather than a hindrance’.